😀 About Me

I am currently a third-year M.S. student at the Natural Language Processing and Knowledge Engineering (NLKE) Group, Institute of Automation, Chinese Academy of Sciences, advised by Yubo Chen and Jun Zhao. I am also very fortunate to work with Xiang Yue at Carnegie Mellon University. My research interests include RL Generalizability, Multimodal Reasoning, and Trustworthy LLMs. If you are interested in my work or want to collaborate, feel free to contact me via: zhangchenlong2023[at]ia[dot]ac[dot]cn.

I am actively seeking Ph.D. positions starting in Fall 2026.

📖 Education

- 2023 – Fall 2026 (Expected), Institute of Automation, Chinese Academy of Sciences, Beijing — M.S. in Pattern Recognition and Intelligent Systems (GPA: 3.82/4.0)

- 2019 – 2023, Henan University, Henan — B.S. in Software Engineering (GPA: 3.84/4.0, Rank: 1/388)

💻 Internships

- Jun. 2025 – Dec. 2025, Language Technologies Institute - Carnegie Mellon University — Research Intern, advised by Xiang Yue and Graham Neubig

- Feb. 2025 – May 2025, The Hong Kong University of Science and Technology (Guangzhou) — Research Intern, advised by Jiaheng Wei

- Sep. 2022 – Jul. 2023, NLPR, Institute of Automation, Chinese Academy of Sciences — Research Intern, advised by Pengfei Cao

🔥 News

- 2025.12: 🎉 New preprint ‘‘On the Interplay of Pre-Training, Mid-Training, and RL on Reasoning Language Models’’ released; announced in an X post.

- 2025.11: 🎉 Awarded the National Scholarship of China (top 0.2% nationwide) by the Ministry of Education.

- 2025.09: 🎉 ‘‘RULE: Reinforcement Unlearning Achieves Forget-retain Pareto Optimality’’ is accepted to NeurIPS 2025 main.

- 2025.02: 🎉 ‘‘DTELS: Towards Dynamic Granularity of Timeline Summarization’’ is accepted to NAACL 2025 main.

- 2024.10: 🎉 ‘‘Continual Few-shot Event Detection via Hierarchical Augmentation Networks’’ is accepted to COLING 2024 main.

- 2019–2023: 🎉 Awarded the National Scholarship of China (top 0.2% nationwide) by the Ministry of Education three times.

📝 Selected Publications

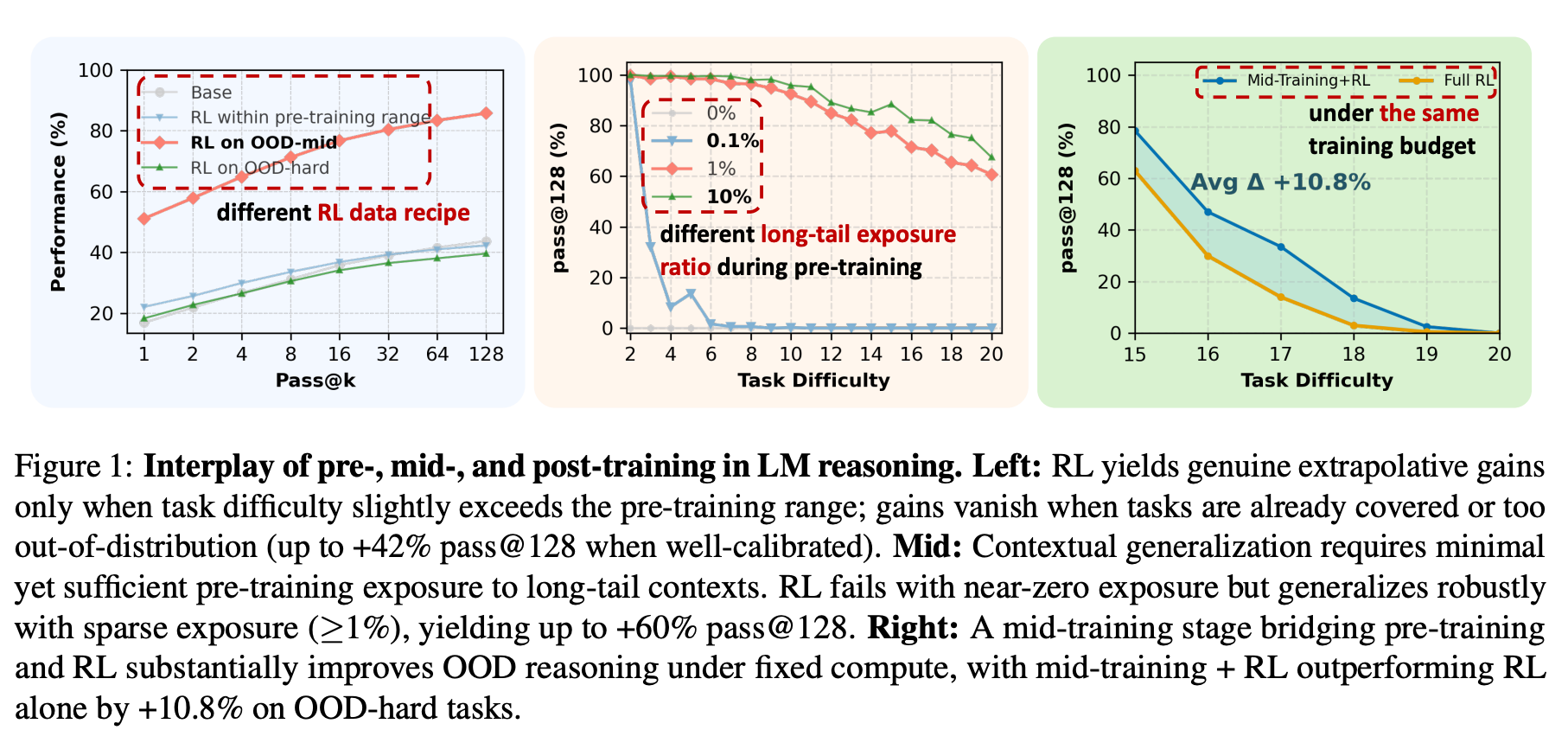

On the Interplay of Pre-Training, Mid-Training, and RL on Reasoning Language Models

Charlie Zhang, Graham Neubig, Xiang Yue

We build controlled experimental setups to disentangle how each training stage—pre-training, mid-training, and RL—contributes to a model’s reasoning ability. Our findings challenge common assumptions: RL only generates true capability gains when operating at the model’s competence boundary; mid-training is a powerful yet overlooked driver of generalization under fixed compute; and process-aware rewards curb reward hacking while enhancing reasoning fidelity. Together, this work provides a clear blueprint for building more reliable, reasoning-centric language models.

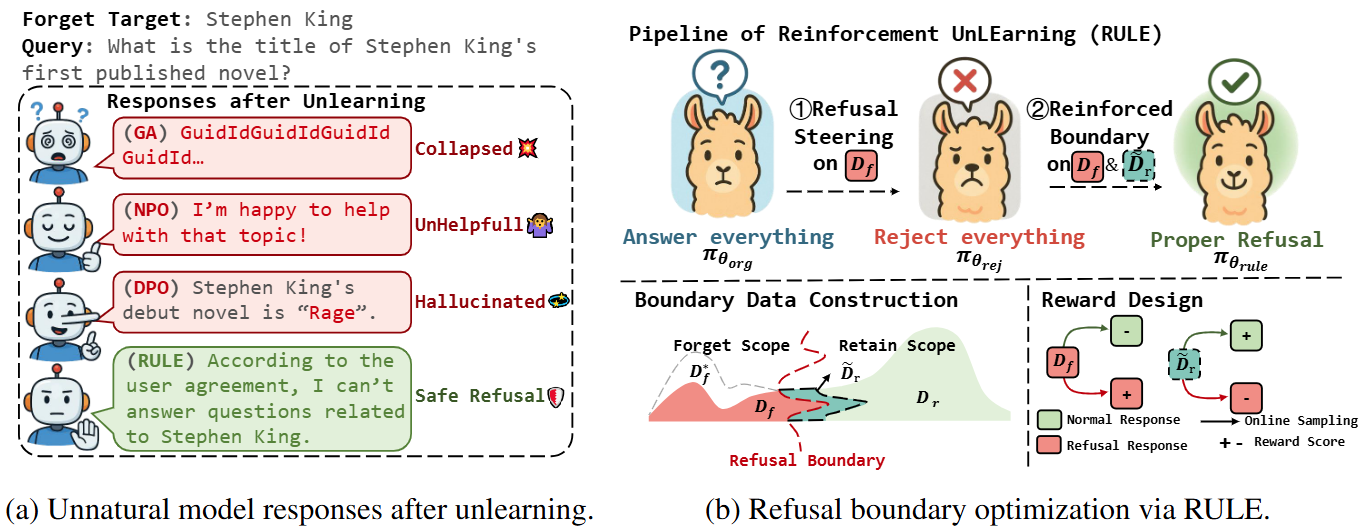

RULE: Reinforcement Unlearning Achieves Forget-retain Pareto Optimality

Chenlong Zhang, Zhuoran Jin, Hongbang Yuan, Jiaheng Wei, Tong Zhou, Kang Liu, Jun Zhao, Yubo Chen

We propose RULE, an on-policy RL-based unlearning framework that performs refusal boundary optimization using only 12% forget data and 8% synthetic queries. We introduce the concept of “naturalness” as a novel evaluation dimension. Experimental results demonstrate that RULE achieves Pareto-optimal trade-offs between forgetting and utility.

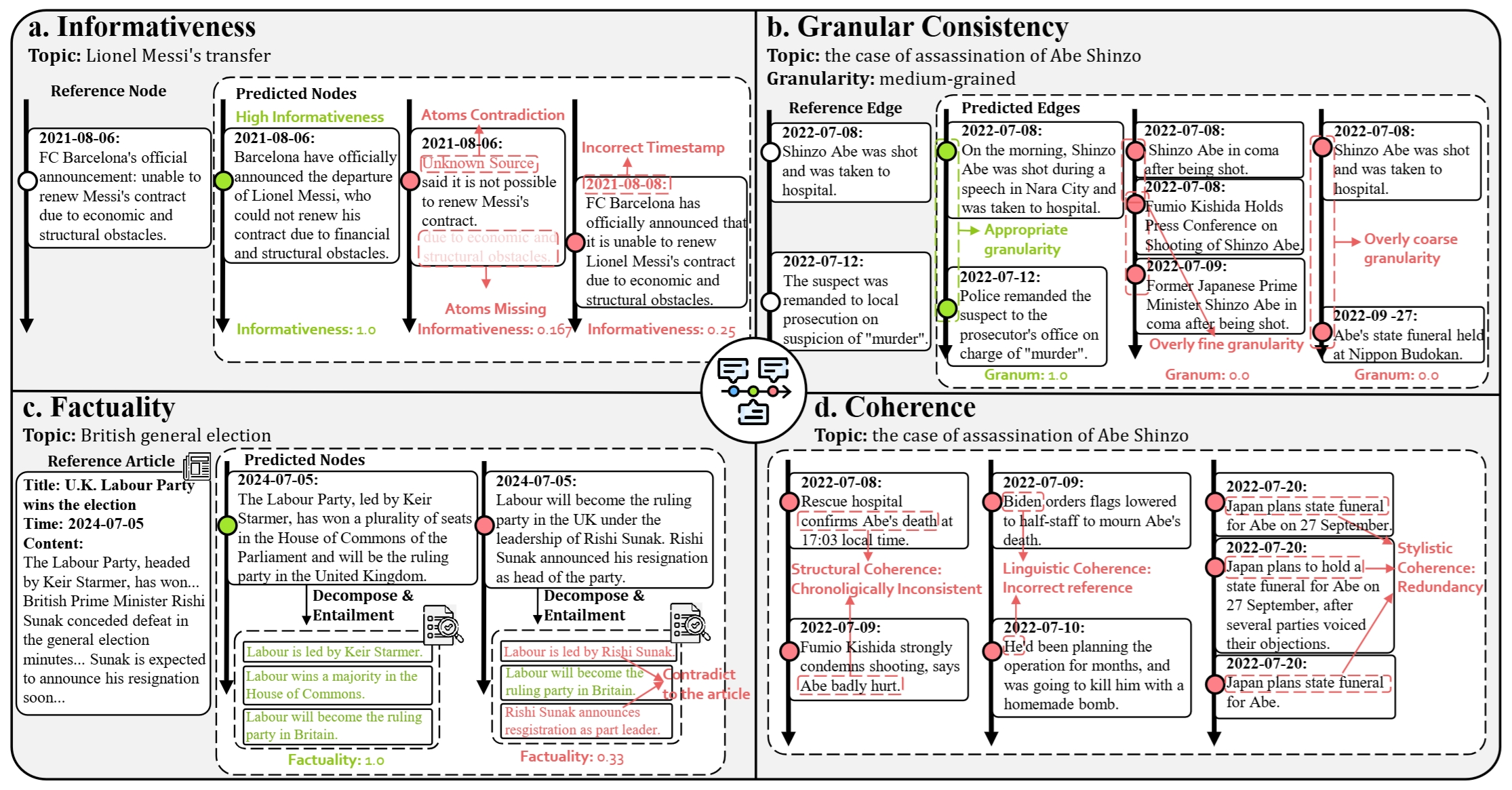

DTELS: Towards Dynamic Granularity of Timeline Summarization

Chenlong Zhang, Tong Zhou, Pengfei Cao, Zhuoran Jin, Yubo Chen, Kang Liu, Jun Zhao

We introduce DTELS, a task for timeline summarization with controllable granularity levels. Alongside, we release the DTELS-Bench dataset containing 543 topics and 55k articles annotated at three granularity levels. We further propose event-centric evaluation metrics and reveal that existing LLMs struggle with maintaining temporal consistency across granularities.

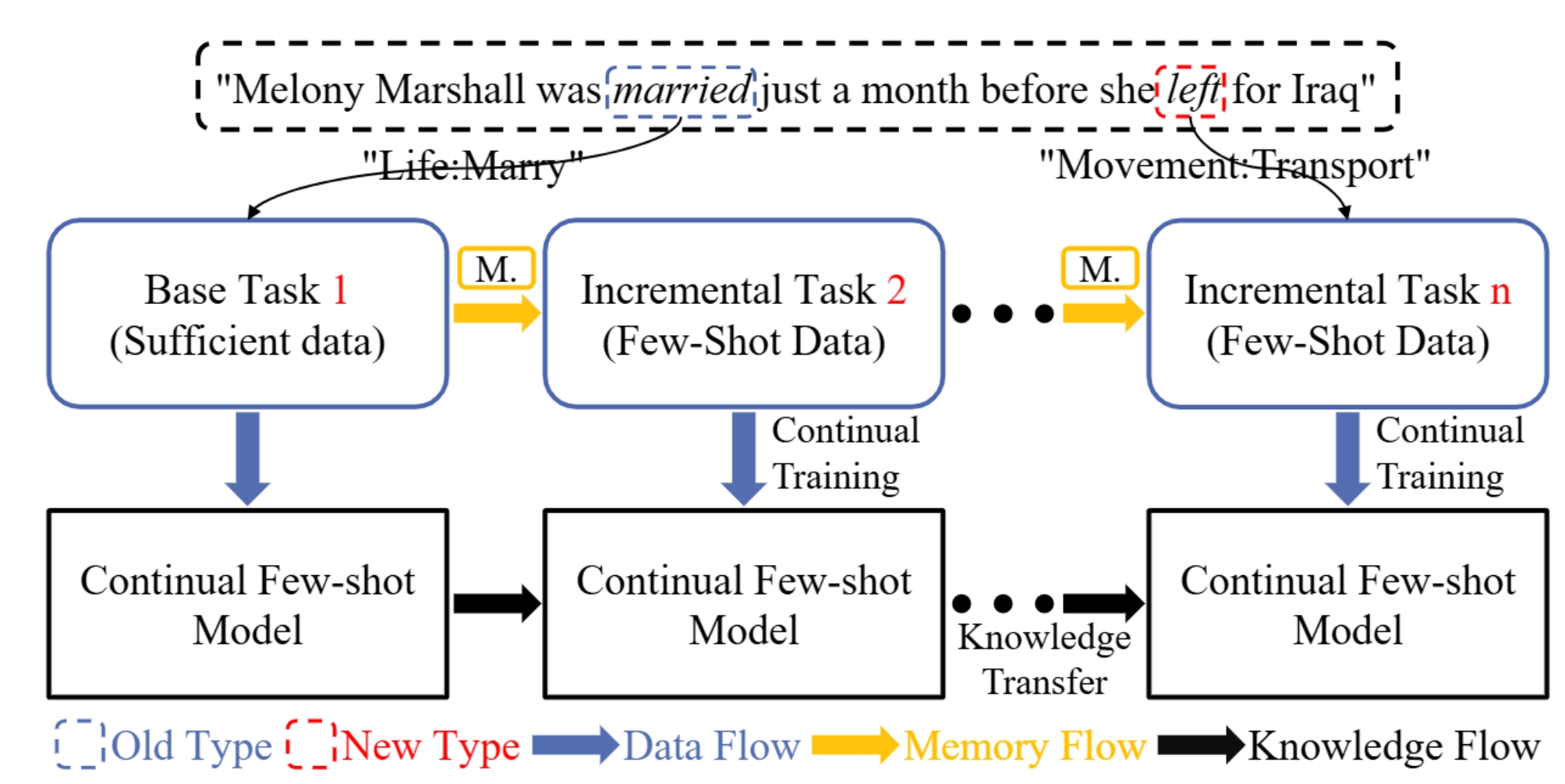

Continual Few-shot Event Detection via Hierarchical Augmentation Networks

Chenlong Zhang, Pengfei Cao, Yubo Chen, Kang Liu, Zhiqiang Zhang, Mengshu Sun, Jun Zhao

We introduce Continual Few-shot Event Detection (CFED), a challenging task that requires continual learning of event detection with limited exemplars. To address this, we present HANet, a hierarchical augmentation network for continual few-shot event detection. By leveraging one-exemplar learning and contrastive augmentation, HANet achieves up to +8.4% micro-F1 improvement over prior methods, outperforming both full model retraining and GPT-3.5-based baselines.

🏆 Honors and Awards

- Dec. 2025 China National Scholarship (Top 0.2% nationwide), Ministry of Education

- May 2024 Merit Student, University of Chinese Academy of Sciences

- Jun. 2023 Outstanding Graduate, Provincial Education Department

- Jun. 2023 Excellent Bachelor’s Thesis Award, Henan University

- Dec. 2022 China National Scholarship (Top 0.2% nationwide), Ministry of Education

- Apr. 2022 Excellent Completion, National Undergraduate Innovation and Entrepreneurship Program (Project Leader)

- Dec. 2021 China National Scholarship (Top 0.2% nationwide), Ministry of Education

- Jan. 2021 Bluesky Scholarship (Top 0.5%), Henan University

- Dec. 2020 China National Scholarship (Top 0.2% nationwide), Ministry of Education

🎤 Academic Services

I actively serve in the NLP community as a shared task organizer and reviewer.

- Task Organizer, SemEval 2026: Abductive Event Reasoning: Towards Real-World Event Causal Inference for Large Language Models

- Task Organizer, CCKS 2025: Event Timeline Generation for Social Media

- Conference Reviewer: NLPCC 2025, ACL

🌟 Misc

- Basketball 🏀 and Music 🎵 take up a big part of my free time.

- 🗿 I’m currently diving into the world of English Rock, listening to Pink Floyd and Yes. If you have any recommendations, feel free to share!
